Chapter 6 Regression for Count Data

So far, we have fitted regression models for continuous-valued quantitative response variables. What if our response variable is really count data – discrete quantitative values limited to zero and positive integers?

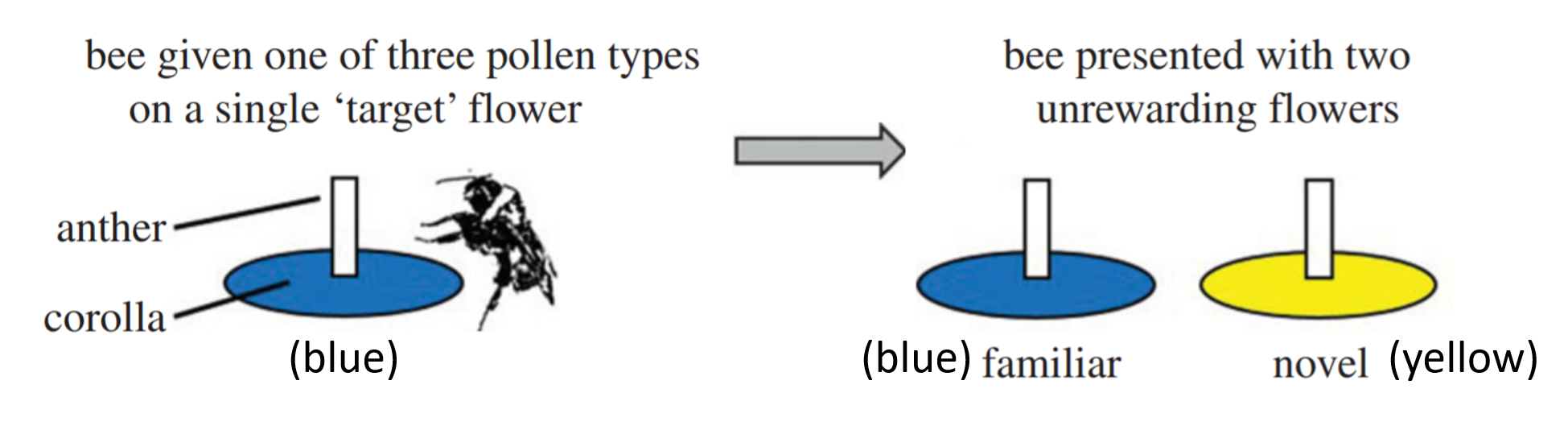

6.1 Data Source

The dataset used here is beevisits, from:

Felicity Muth, Jacob S. Francis, and Anne S. Leonard. 2017. Bees use the taste of pollen to determine which flowers to visit. Biology Letters 12(7): DOI: 10.1098/rsbl.2016.0356. http://rsbl.royalsocietypublishing.org/content/roybiolett/12/7/20160356.full.pdf.

6.2 A bad idea: multiple linear regression model

## colony beename treatment target.colour

## W:126 giantbeeYsucroseyellow: 3 Quinine :90 blue :138

## X: 66 o51Wsucroseblue : 3 Cellulose:90 yellow:132

## Y: 78 o54Wquinineblue : 3 Sucrose :90

## o58Wcelluloseyellow : 3

## o60Wcelluloseblue : 3

## o63Wquinineblue : 3

## (Other) :252

## novisits flower

## Min. : 0.00 familiar:90

## 1st Qu.: 1.00 novel :90

## Median : 3.00 target :90

## Mean : 4.44

## 3rd Qu.: 7.00

## Max. :21.00

## ##

## Call:

## lm(formula = novisits ~ flower + treatment + colony, data = beevisits)

##

## Residuals:

## Min 1Q Median 3Q Max

## -6.567 -2.111 -0.285 1.567 11.577

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.111 0.444 4.76 3.3e-06 ***

## flowernovel -1.678 0.439 -3.83 0.00016 ***

## flowertarget 5.456 0.439 12.44 < 2e-16 ***

## treatmentCellulose 2.688 0.439 6.12 3.3e-09 ***

## treatmentSucrose 2.724 0.441 6.17 2.6e-09 ***

## colonyX -0.832 0.449 -1.85 0.06511 .

## colonyY -1.849 0.425 -4.35 2.0e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 2.94 on 263 degrees of freedom

## Multiple R-squared: 0.576, Adjusted R-squared: 0.567

## F-statistic: 59.6 on 6 and 263 DF, p-value: <2e-16

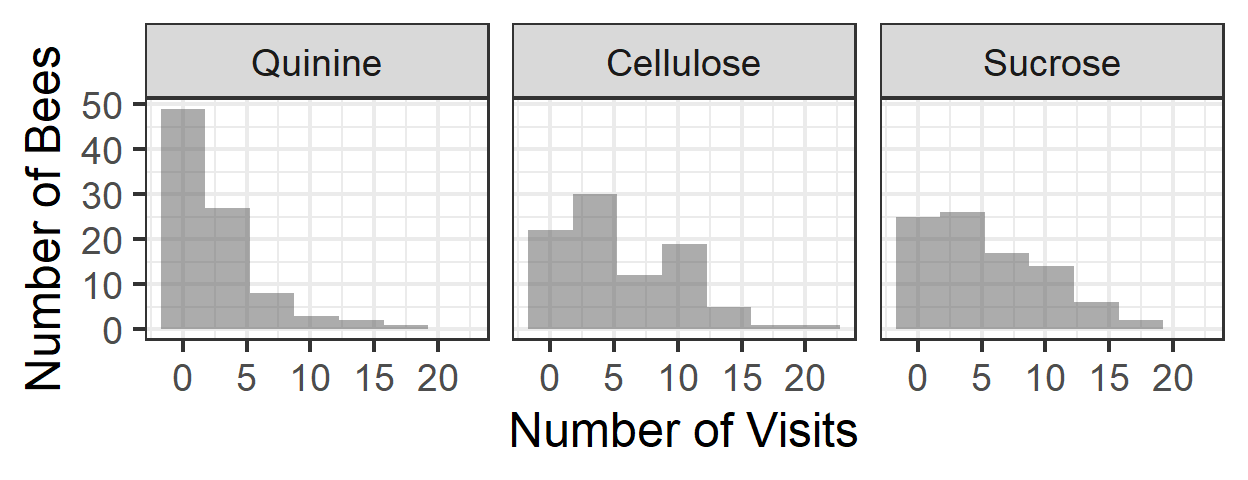

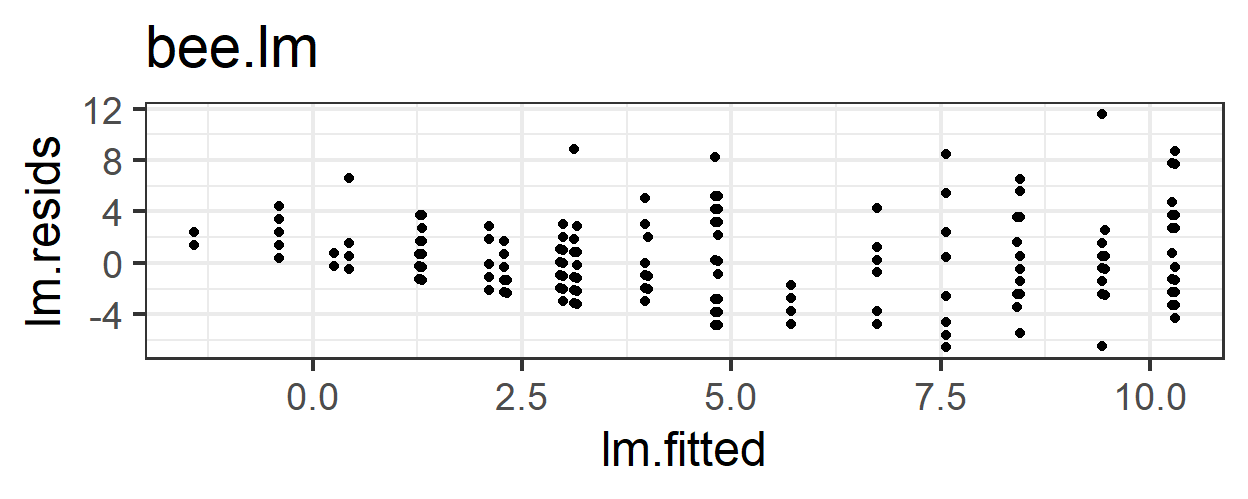

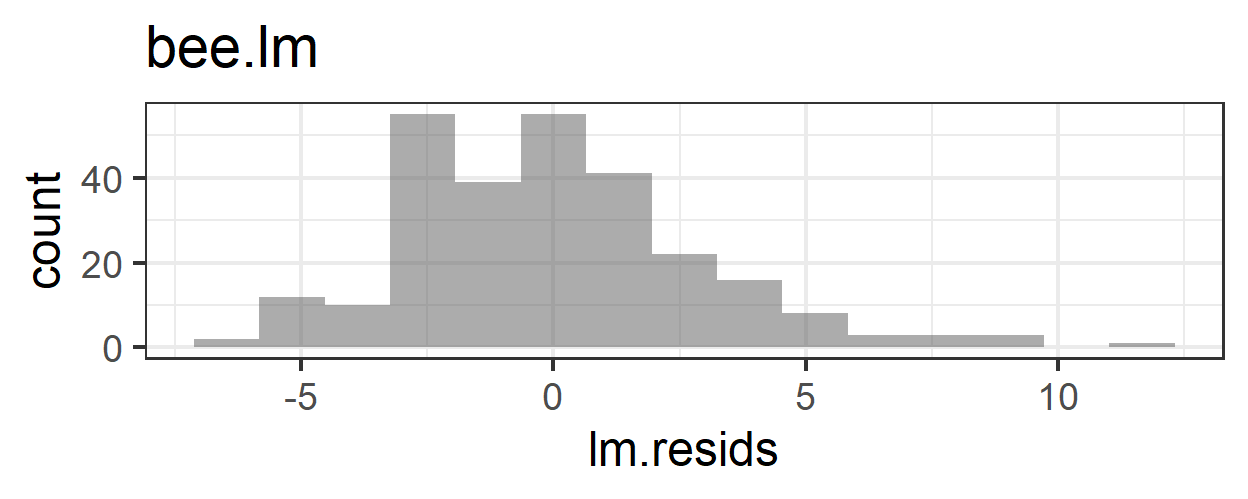

6.3 Problems with the linear model

What problems do we have with this model (its appropriateness, or goodness of fit to the data)?

- Non-constant error variance

- Non-normality of residuals

- Some predicted values are less than 0 – it’s impossible that a bee could visit a flower a negative number of times!

6.4 Poisson Regression

Detailed notes on the model equation were filled in here in class

6.4.1 Fitting the Model

prm <- glm(novisits ~ flower + treatment +

colony, data=beevisits,

family=poisson(link='log'))

summary(prm)##

## Call:

## glm(formula = novisits ~ flower + treatment + colony, family = poisson(link = "log"),

## data = beevisits)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.994 -1.312 -0.386 0.779 4.702

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 0.7905 0.0869 9.09 < 2e-16 ***

## flowernovel -0.7507 0.1044 -7.19 6.5e-13 ***

## flowertarget 0.9994 0.0692 14.45 < 2e-16 ***

## treatmentCellulose 0.6982 0.0791 8.83 < 2e-16 ***

## treatmentSucrose 0.7095 0.0797 8.91 < 2e-16 ***

## colonyX -0.1752 0.0718 -2.44 0.015 *

## colonyY -0.4372 0.0731 -5.98 2.2e-09 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for poisson family taken to be 1)

##

## Null deviance: 1236.33 on 269 degrees of freedom

## Residual deviance: 542.05 on 263 degrees of freedom

## AIC: 1261

##

## Number of Fisher Scoring iterations: 56.4.2 Conditions

What conditions must hold for this model to be appropriate?

- Response variable (y) contains count data

- Linearity: \(log(\lambda_{i})\) is a linear function of the covariates \(x_1\), \(x_2\), … \(x_n\). (Later we will consider how to check this when some covariates are quantitative…in brief: plot log(rate) as a function of each covariate and look for (no non-)linear trend.)

- Mean = Variance

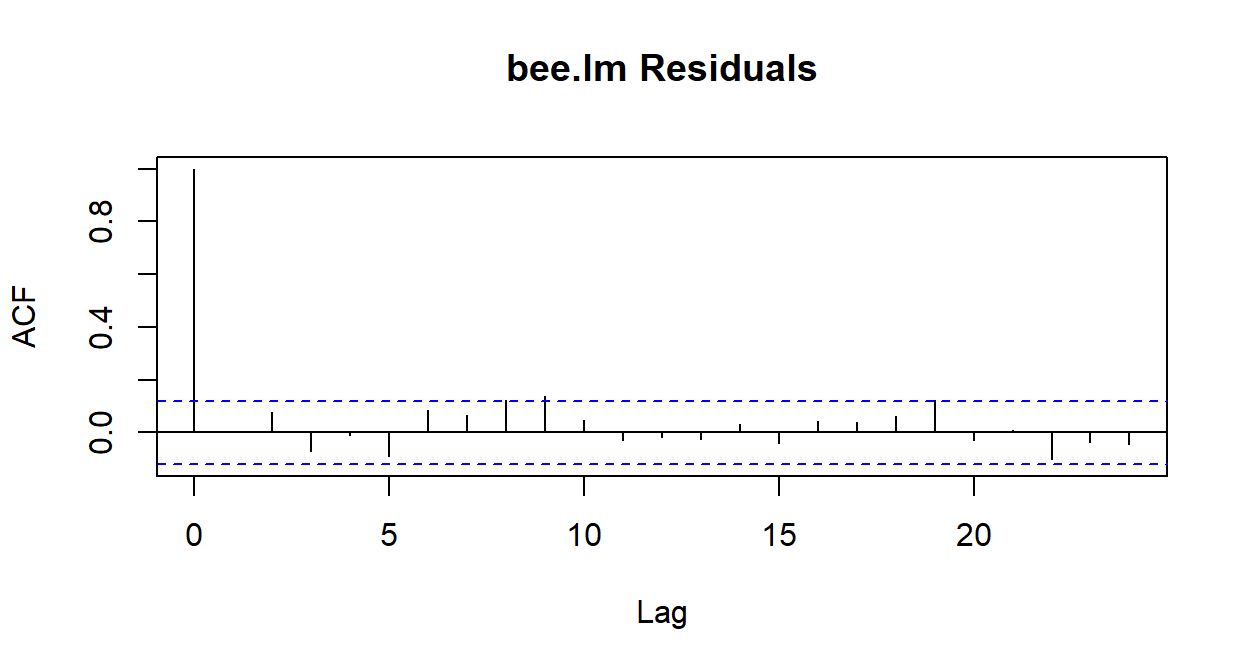

- Independence (of residuals)

- There is not a condition specifying a PDF that the residuals should follow.

6.4.3 Model Assessment

Which conditions can we check already?

What does type='response' mean?

This means that the residuals are reported on the same scale as the response variable (that is, in units of counts, rather than log(counts)).

beevisits <- beevisits %>%

mutate(pm.resids = resid(prm, type='response'),

pm.fitted = fitted(prm))

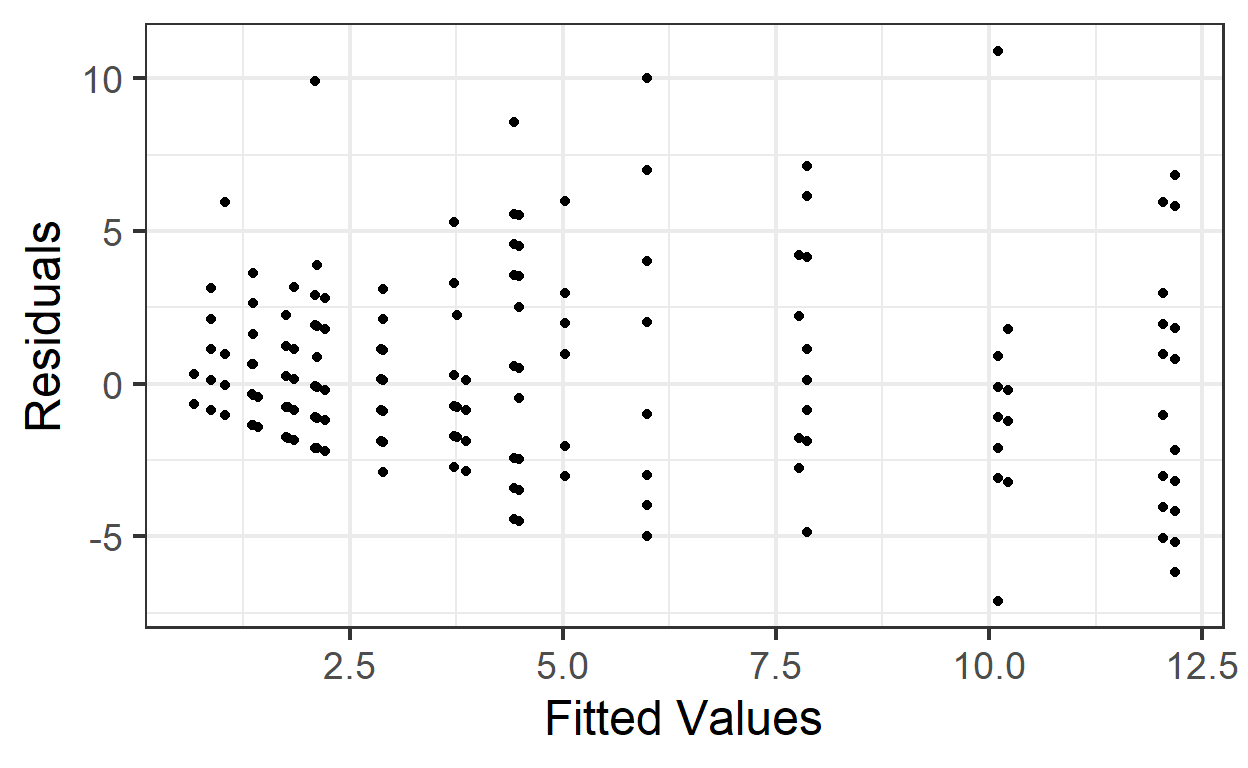

gf_point(pm.resids ~ pm.fitted, data=beevisits) %>%

gf_labs(x='Fitted Values', y='Residuals')

This trumpet pattern is what we expect! Why?

If the variance equals the mean, then as the mean (fitted value) goes up, then the variance (spread of residuals) will also be larger.

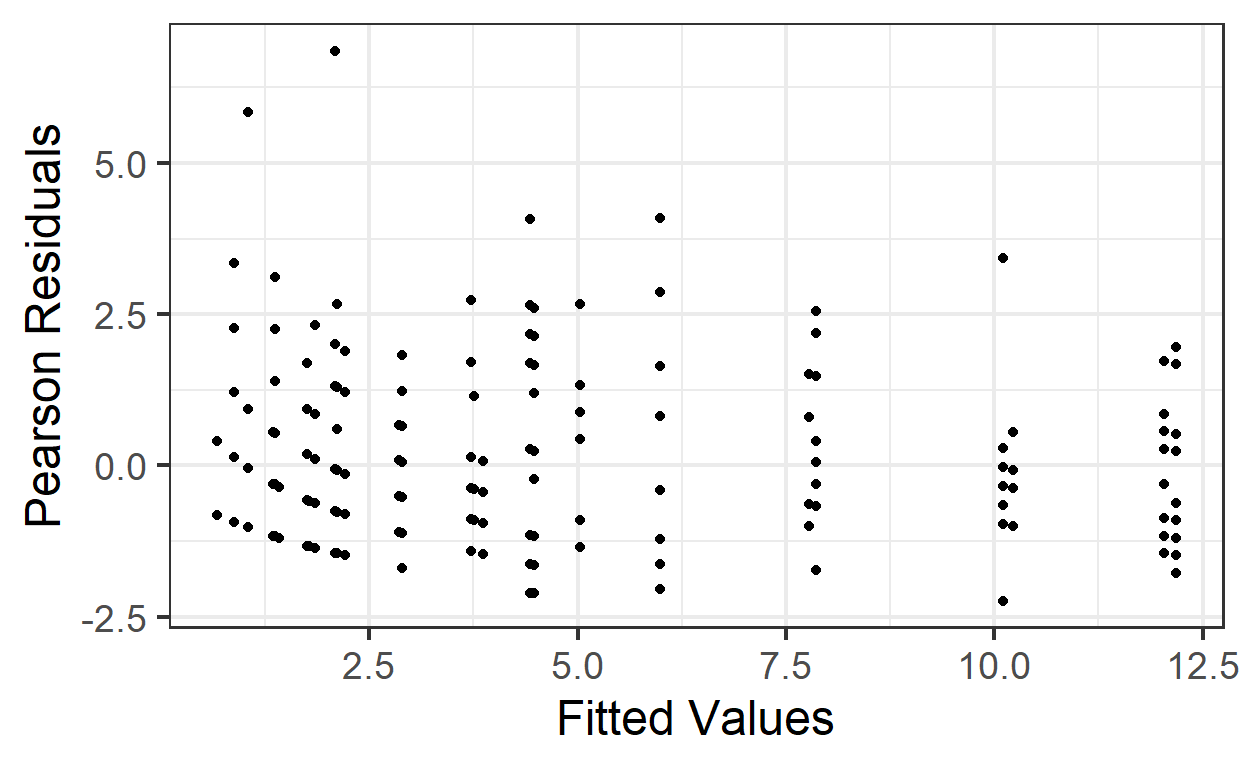

But how can we decide if it’s the right amount of increase in variance with increase in fitted value? We can compute Pearson residuals, which are scaled by the expected variance. The Pearson residuals should have approximately constant variance as a function of fitted values, and a standard deviation of about 1.

beevisits <- beevisits %>%

mutate(pm.pearson.resids = resid(prm, type='pearson'))

gf_point( pm.pearson.resids ~ pm.fitted, data=beevisits) %>%

gf_labs(x='Fitted Values', y='Pearson Residuals')

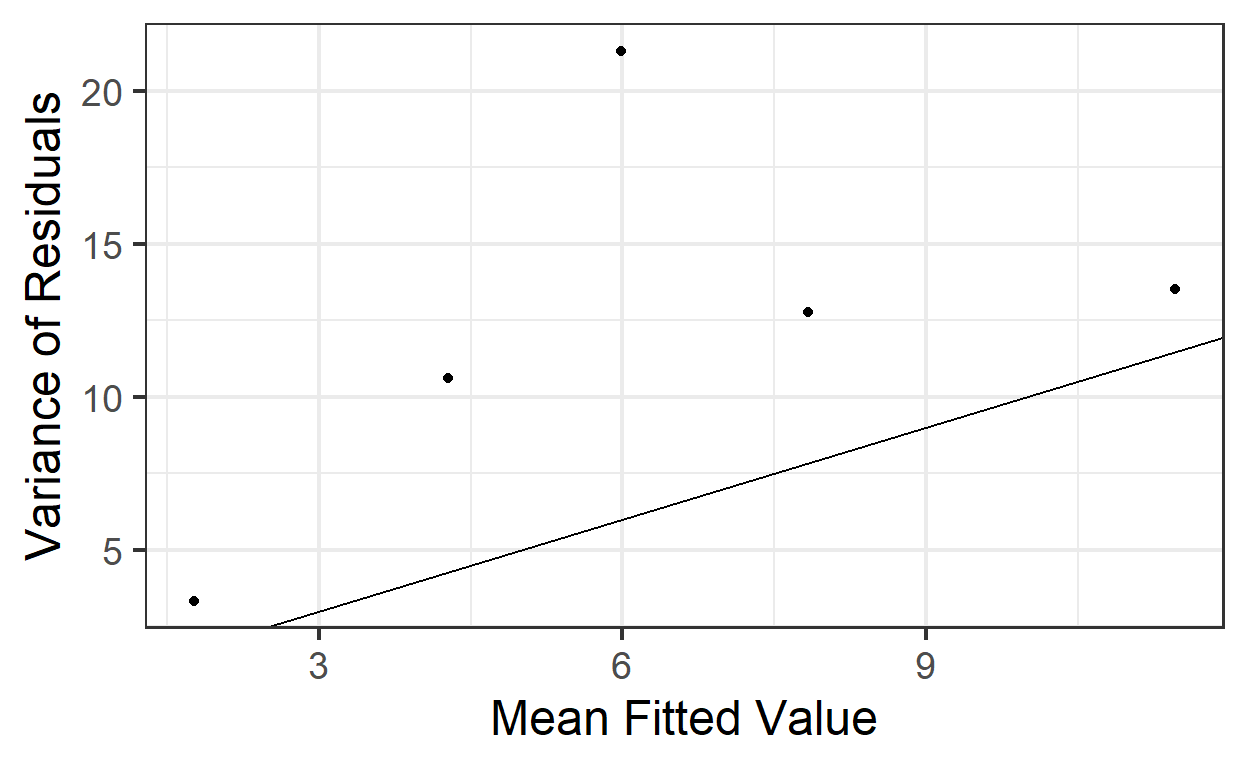

A more complex solution: we could divide the fitted values into bins (choice of bin size is somewhat arbitrary; generally, you want them as small as possible, but still containing enough observations per bin to get good mean and variance estimates). In each bin, compute the mean and the variance of the residuals. Plot these means vs these variances and see if the slope is 1 (and intercept 0).

First, split the fitted values into 5 bins:

## colony beename treatment target.colour novisits flower

## 1 Y giantbeeYsucroseyellow Sucrose yellow 12 target

## 2 W o51Wsucroseblue Sucrose blue 8 target

## 3 W o54Wquinineblue Quinine blue 13 target

## 4 W o58Wcelluloseyellow Cellulose yellow 9 target

## 5 W o60Wcelluloseblue Cellulose blue 11 target

## 6 W o63Wquinineblue Quinine blue 5 target

## lm.resids lm.fitted pm.resids pm.fitted pm.pearson.resids fitted.bins

## 1 3.559 8.44 4.136 7.86 1.475 (7.57,9.88]

## 2 -2.291 10.29 -4.177 12.18 -1.197 (9.88,12.2]

## 3 5.433 7.57 7.011 5.99 2.865 (5.27,7.57]

## 4 -1.255 10.26 -3.039 12.04 -0.876 (9.88,12.2]

## 5 0.745 10.26 -1.039 12.04 -0.300 (9.88,12.2]

## 6 -2.567 7.57 -0.989 5.99 -0.404 (5.27,7.57]Next, compute the means and variances in each bin, view the result, and plot:

binned.bees <- beevisits %>%

group_by(fitted.bins) %>%

summarise(mean = mean(pm.fitted, na.rm=TRUE),

var = var(pm.resids))

binned.bees## # A tibble: 5 x 3

## fitted.bins mean var

## <fct> <dbl> <dbl>

## 1 (0.661,2.97] 1.77 3.34

## 2 (2.97,5.27] 4.28 10.6

## 3 (5.27,7.57] 5.99 21.3

## 4 (7.57,9.88] 7.83 12.8

## 5 (9.88,12.2] 11.5 13.5gf_point(var ~ mean, data=binned.bees) %>%

gf_labs(x='Mean Fitted Value',

y='Variance of Residuals') %>%

gf_abline(slope=1, intercept=0)

That’s a bit of a pain, and still a judgment call at the end.

How else can we check the Mean = Variance condition?

6.4.4 Checking for overdispersion using overdispersion factor

We can estimate the overdispersion factor. This satisfies

\[ \text{residual variance} = \text{overdispersion factor} * \text{mean}\]

So if it’s a lot larger than 1, we have a problem. The function overdisp_fun() in package s245 computes an estimate:

## [1] 2.05Here, it’s about 2: not too terrible, but still, residual variance is double what it should be according to our model. (Usually if the overdispersion is larger than 2 we will prefer a different model that better accounts for the fact that \(\text{mean} \neq \text{variance}\).)

6.5 Accounting for overdispersion: Negative Binomial Models

Finally, we could simply fit a model that allows for a more permissive mean-variance relationship (where the variance can be larger or smaller than the mean, by different amounts), and see if it is a better fit to the data according it our IC. The negative binomial distributions (type I and II) do this:

require(glmmTMB)

nbm1 <- glmmTMB(novisits ~ flower + treatment +

colony, data=beevisits,

family=nbinom1(link='log'))

nbm2 <- glmmTMB(novisits ~ flower + treatment +

colony, data=beevisits,

family=nbinom2(link='log'))

summary(nbm1)## Family: nbinom1 ( log )

## Formula: novisits ~ flower + treatment + colony

## Data: beevisits

##

## AIC BIC logLik deviance df.resid

## 1183 1211 -583 1167 262

##

##

## Overdispersion parameter for nbinom1 family (): 1.07

##

## Conditional model:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 0.7173 0.1229 5.84 5.3e-09 ***

## flowernovel -0.7414 0.1450 -5.11 3.2e-07 ***

## flowertarget 1.0384 0.0981 10.58 < 2e-16 ***

## treatmentCellulose 0.7156 0.1117 6.41 1.5e-10 ***

## treatmentSucrose 0.7432 0.1115 6.67 2.6e-11 ***

## colonyX -0.1150 0.0997 -1.15 0.24904

## colonyY -0.3839 0.1015 -3.78 0.00016 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1## Family: nbinom2 ( log )

## Formula: novisits ~ flower + treatment + colony

## Data: beevisits

##

## AIC BIC logLik deviance df.resid

## 1207 1236 -596 1191 262

##

##

## Overdispersion parameter for nbinom2 family (): 4.69

##

## Conditional model:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 0.7354 0.1178 6.24 4.2e-10 ***

## flowernovel -0.7255 0.1269 -5.72 1.1e-08 ***

## flowertarget 1.0438 0.0998 10.46 < 2e-16 ***

## treatmentCellulose 0.7619 0.1123 6.79 1.2e-11 ***

## treatmentSucrose 0.7694 0.1142 6.73 1.6e-11 ***

## colonyX -0.1681 0.1084 -1.55 0.12

## colonyY -0.5153 0.1076 -4.79 1.7e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We can use AIC or BIC-based model selection to decide which model fits best, between Poisson, NB1, and NB2 models.

## df AIC

## prm 7 1261

## nbm1 8 1183

## nbm2 8 1207We have a clear winner: NB1!

6.6 Accounting for overdispersion: quasi-Poisson Model

Or yet another option…in our class, we will not use quasi-Poisson models as much, as they are fitted via quasi-likelihood and this complicates model selection.

qprm <- glm(novisits ~ flower + treatment +

colony, data=beevisits,

family=quasipoisson(link='log'))

summary(qprm)##

## Call:

## glm(formula = novisits ~ flower + treatment + colony, family = quasipoisson(link = "log"),

## data = beevisits)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.994 -1.312 -0.386 0.779 4.702

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.790 0.124 6.35 9.1e-10 ***

## flowernovel -0.751 0.149 -5.02 9.3e-07 ***

## flowertarget 0.999 0.099 10.10 < 2e-16 ***

## treatmentCellulose 0.698 0.113 6.17 2.6e-09 ***

## treatmentSucrose 0.710 0.114 6.22 1.9e-09 ***

## colonyX -0.175 0.103 -1.71 0.089 .

## colonyY -0.437 0.105 -4.18 4.0e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for quasipoisson family taken to be 2.05)

##

## Null deviance: 1236.33 on 269 degrees of freedom

## Residual deviance: 542.05 on 263 degrees of freedom

## AIC: NA

##

## Number of Fisher Scoring iterations: 5We should not compare AIC with QAIC to compare two models, so to decide between Poisson and quasi-Poisson (if you ever use it) you must rely on the model assessment plots and the overdispersion factor to decide which is better.

6.7 Model selection with dredge() and (Q)AIC, BIC

Poisson and negative binomial models are fitted via maximum likelihood, so AIC or BIC may be used for model selection.

How can we use model selection criteria in a case where the likelihood can’t be computed exactly?

The quasi (log) likelihood is an approximation to the likelihood. It has some properties in common with a (log) likelihood, but is not a likelihood; we resort to using it in cases where the (log) likelihood can not even be evaluated.

With some code/mathematical gymnastics, we can use principles of quasi-likelihood to estimate QAIC (quasi-AIC, or AIC based on quasi-likelihood) in R for model selection.

6.7.1 Review: all subsets selection with dredge()

What if, instead of comparing two (or a few) specific models, we want to compare all possible models that contain some combination of a set of candidate covariates? The package MuMIn contains a function, dredge(), that takes as input a “full” model (one containing all the candidate covariates). It then computes AIC (or other model selection criteria) for all possible models containing subsets of the covariate and reports the results in a ranked table. For example, for our Poisson regression model, we could do:

require(MuMIn)

#have to make sure na.action is 'na.fail' for input model

prm <- update(prm, na.action='na.fail')

dredge(prm, rank='AIC')## Global model call: glm(formula = novisits ~ flower + treatment + colony, family = poisson(link = "log"),

## data = beevisits, na.action = "na.fail")

## ---

## Model selection table

## (Intrc) colny flowr trtmn df logLik AIC delta weight

## 8 0.790 + + + 7 -623 1261 0 1

## 7 0.643 + + 5 -642 1295 34 0

## 4 1.310 + + 5 -677 1364 104 0

## 3 1.156 + 3 -695 1396 136 0

## 6 1.124 + + 5 -899 1807 547 0

## 5 0.977 + 3 -918 1841 581 0

## 2 1.644 + 3 -953 1911 650 0

## 1 1.490 1 -970 1943 682 0

## Models ranked by AIC(x)## Global model call: glm(formula = novisits ~ flower + treatment + colony, family = poisson(link = "log"),

## data = beevisits, na.action = "na.fail")

## ---

## Model selection table

## (Intrc) colny flowr trtmn df logLik BIC delta weight

## 8 0.790 + + + 7 -623 1286 0.0 1

## 7 0.643 + + 5 -642 1313 26.8 0

## 4 1.310 + + 5 -677 1382 96.6 0

## 3 1.156 + 3 -695 1407 121.2 0

## 6 1.124 + + 5 -899 1825 539.5 0

## 5 0.977 + 3 -918 1852 566.3 0

## 2 1.644 + 3 -953 1922 636.0 0

## 1 1.490 1 -970 1946 660.7 0

## Models ranked by BIC(x)6.7.2 Review: IC “weights”

Note the last two columns of the dredge output: the “delta” (or \(\delta\)) AIC or BIC values and the “weights”. The \(\delta\)s are obtained by simply subtracting the best model’s IC value from that of each other model.

We already mentioned a rule of thumb: that the \(\delta IC\) should be at least 3 or to provide reasonable evidence that one model is really better than the other. Another way of measuring the differences between models is to use model weights. Theoretically, these measure the relative likelihoods of different models; you can think of them as giving the probability that a given model is the best-fitting one in the set of models examined, according to the IC being used.

Model weights are computed simply according to: \[ e^\frac{-\delta IC}{2}\]

And they sum to one for all models in a dredge().

6.7.3 Extending dredge() to quasi-Likelihood

With (rather a lot of) effort to define some custom functions, we can do the same thing for a quasi-Poisson model using quasi-AIC. Note: This idea is based on notes by Ben Bolker provided with the R package bbmle.

require(MuMIn)

# modify a glm() output object so that

# it contains a quasipoisson fit but the

# AIC (likelihood) from the equivalent regular Poisson model

x.quasipoisson <- function(...) {

res <- quasipoisson(...)

res$aic <- poisson(...)$aic

res

}

# function to extract the overdispersion parameter

# from a quasi model

dfun <- function(object) {

with(object,sum((weights * residuals^2)[weights > 0])/df.residual)

}

# function that modifies MuMIn::dredge()

# for use with quasi GLM

qdredge <- function(model, family='x.quasipoisson', na.action=na.fail, chat = dfun(model), rank='QAIC', ...){

model2 <- update(model, family=family, na.action=na.action)

(dt <- dredge(model2, rank=rank, chat=chat, ...))

}

#do "dredge" for model selection

qdredge(qprm)## Global model call: glm(formula = novisits ~ flower + treatment + colony, family = family,

## data = beevisits, na.action = na.action)

## ---

## Model selection table

## (Intrc) colny flowr trtmn df logLik rank delta weight

## 8 0.790 + + + 7 -623 625 0.0 0.999

## 7 0.643 + + 5 -642 640 14.6 0.001

## 4 1.310 + + 5 -677 674 48.7 0.000

## 3 1.156 + 3 -695 687 62.2 0.000

## 6 1.124 + + 5 -899 890 265.0 0.000

## 5 0.977 + 3 -918 904 279.6 0.000

## 2 1.644 + 3 -953 939 313.6 0.000

## 1 1.490 1 -970 952 327.1 0.000

## Models ranked by rank(x, chat = 2.04707063774377)Note: the qdredge() function is also provided for you in the package s245. So if you do want to use this, all you need to do is use s245::qdredge() instead of dredge().

6.8 Offsets

In our model, the response variable was the number of flowers visited by a bee, and each bee was in the same experimental setting for the same amount of time, so there was no need to account for effort or time spent in each case.

This is not always true: consider, for example:

- Dolphin surveys

- Bird counts

- Consider the schools and crime example from homework as well

In this case, it would be natural to adjust for effort. The intuitive way to do it would be to use counts per unit effort as the response variable:

\[ log(\frac{\lambda_i}{effort}) = \beta_0 + \dots \]

But notice that this is equivalent to including \(log(effort)\) as an ``offset" on the right hand side of the regression equation:

\[ log(\lambda_i) = \beta_0 + \dots + log(effort)\]

This is how we specify models with offsets in R:

Note: if you use dredge() with a model with an offset, be sure to specify the offset as a “fixed” term, i.e. a term that must be included in all models:

6.9 Prediction Plots

Once you have a “best” model, how do you interpret it?

When we went to great lengths to make prediction plots for a linear regression model so we could “see” the slope of the predicted relationship, you may have wondered: why bother? I can just look at the slope estimate and get the same insight!

Now, with a more complicated model equation with a link function, it’s not so easy. Now, we will really appreciate those prediction plots!

With the link function and the Poisson distribution, it is more challenging to interpret the coefficients of the model directly. The easiest way to understand the effects of different predictors is to look at plots of model predictions. However, as always we don’t want to plot fitted(model) as a function of each predictor in a model with more than one predictor; predictors other than the one we are interested in will influence the predictions, introducing extra variation. Instead, we will construct a new (fake) dataset to make predictions for, in which all predictors but the one we are interested in are held constant. For example, using our quasi-Poisson model and looking at the effect of colony (predicted values with 95% CIs):

newdata <- data.frame(colony=c('W', 'X', 'Y'),

flower='familiar',

target.colour='blue',

treatment='Cellulose')

pred = predict(qprm, newdata=newdata,

type='response',

se.fit=TRUE)

newdata <- newdata %>%

mutate(preds = pred$fit,

CIlow = pred$fit - 1.96*pred$se.fit,

CIup = pred$fit + 1.96*pred$se.fit)

gf_point(preds ~ colony, data=newdata) %>%

gf_labs(x='Colony', y='Predicted\nN. Visits') %>%

gf_errorbar(CIlow + CIup ~ colony, data=newdata)

If we had a quantitative predictor instead, the process would be similar, except when we define newdata, we would have to choose a range and granularity for which to make predictions. For example, imagine if bee length in mm was one of our predictors, and we wanted predictions for lengths between 0.5 and 3 mm (with a value every 0.05mm). We might begin:

newdata <- expand.grid(bee.length = seq(from=0.05, by=0.05, to=3),

colony=c('W'),

flower='familiar',

target.colour='blue',

treatment='Cellulose')

head(newdata)## bee.length colony flower target.colour treatment

## 1 0.05 W familiar blue Cellulose

## 2 0.10 W familiar blue Cellulose

## 3 0.15 W familiar blue Cellulose

## 4 0.20 W familiar blue Cellulose

## 5 0.25 W familiar blue Cellulose

## 6 0.30 W familiar blue CelluloseThen, when we make the plot, we would need to use gf\_ribbon() instead of gf\_errorbar() to show the CI.

We can also use pred_plot(fitted_model, 'variable_name') to make these plots with a lot less code (and if you need to know the values at which other predictors are fixed, use get_fixed(dataset)).